One of the funny things about being a consultant in the IT industry is that you see everybody struggling with the same questions and problems.

One discussion I encounter everywhere is how many representations of an entity are needed to get data from the database to the client: Do we send domain objects to the client? Do we use DTOs? Are the mapped classes the domain model? ...

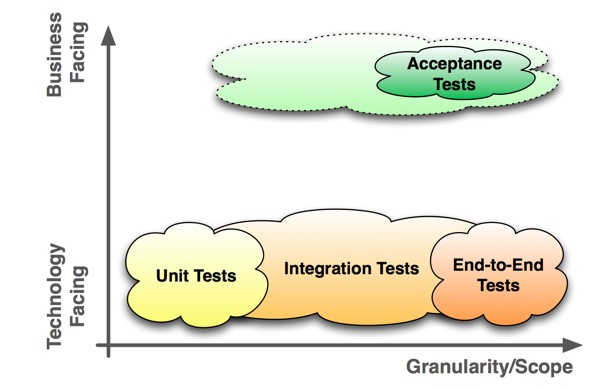

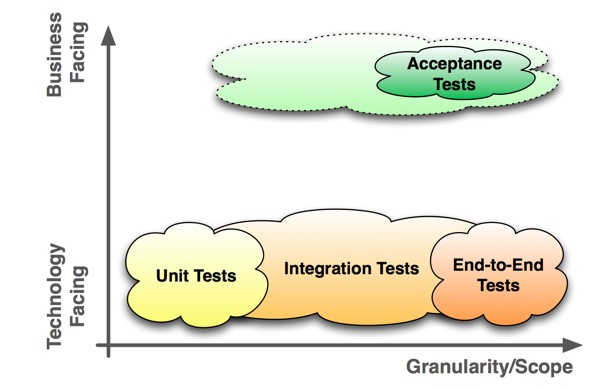

Another famous discussion is the classification of automated tests: What are unit tests, what are integration tests, and how do they differ from end-to-end tests? What are acceptance tests? Which of them do we need? What are the developers responsible for, and where do the testers come in? Which kind of test do we write first?

A recent instance of this discussion has popped up on Ted Neward's blog: "Integration testing and unit testing".

Based on the Agile Testing Quadrants, I drew a diagram that explains my understanding of the classification of unit, integration, end-to-end and acceptance tests:


Unit, integration and end-to-end are classifications by the scope (granularity) of a test: they describe how "large" the unit under test is.

Acceptance is a classification that is orthogonal to the scope of a test. It describes whether the test gives relevant feedback about the specified functionality of the system.

Acceptance tests can be written at different levels of granularity. This means an acceptance test can be a unit, integration or end-to-end test.

For instance, the core calculation logic of an insurance application can be tested at the unit level. For such core business logic, a unit test can well serve as an acceptance test.
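To make the insurance example concrete, here is a minimal sketch in Python. It assumes a hypothetical `calculate_premium` function with invented rating rules (a 50% surcharge for drivers under 25 and 10% per claim in the last five years); none of this comes from a real product. The tests exercise only this one function, so their scope is a unit, yet each one checks a rule a stakeholder could sign off on:

```python
def calculate_premium(base_premium: float, driver_age: int, claims_last_5_years: int) -> float:
    """Hypothetical core calculation of a car insurance premium.

    The business rules are invented for illustration:
    - drivers under 25 pay a 50% surcharge
    - each claim in the last five years adds 10% to the premium
    """
    if base_premium < 0 or driver_age < 0 or claims_last_5_years < 0:
        raise ValueError("inputs must be non-negative")
    premium = base_premium
    if driver_age < 25:
        premium *= 1.5  # young-driver surcharge
    premium *= 1 + 0.10 * claims_last_5_years  # loading for recent claims
    return round(premium, 2)  # round to cents to hide floating-point noise


# Pytest-style tests (run with `pytest`). They run in-process with no database,
# UI or network, yet each one mirrors a rule the stakeholders specified.
def test_young_driver_pays_50_percent_surcharge():
    assert calculate_premium(1000.0, driver_age=22, claims_last_5_years=0) == 1500.0


def test_each_recent_claim_adds_10_percent():
    assert calculate_premium(1000.0, driver_age=40, claims_last_5_years=2) == 1200.0


def test_surcharges_combine():
    assert calculate_premium(1000.0, driver_age=20, claims_last_5_years=1) == 1650.0
```

Because only the calculation is involved, such tests run fast and a failure points directly at the broken business rule.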

Acceptance tests are usually accessible to the stakeholders in some form. This can be achieved by binding a business-readable specification to the test, or by printing a business-readable test trace (see "BDD tools: business readable input vs. business readable output").
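A business-readable test trace can be as simple as a test that narrates what it does. The following is a minimal sketch of that "business readable output" idea in Python; the `BusinessTrace` helper, the `yearly_premium` function and its rules are all hypothetical and not the API of any real BDD tool. The scenario prints each Given/When/Then step in plain language while it runs:

```python
from typing import List


class BusinessTrace:
    """Hypothetical helper (not a real BDD library): collects plain-language
    Given/When/Then steps so a test run prints a business-readable trace."""

    def __init__(self) -> None:
        self.steps: List[str] = []

    def step(self, keyword: str, text: str) -> None:
        line = f"{keyword} {text}"
        self.steps.append(line)
        print(line)  # the printed trace is what stakeholders read


def yearly_premium(base: float, driver_age: int, claims: int) -> float:
    # Invented rules for illustration: +50% under 25, +10% per recent claim.
    surcharge = 1.5 if driver_age < 25 else 1.0
    return round(base * surcharge * (1 + 0.10 * claims), 2)


def young_driver_scenario(trace: BusinessTrace) -> float:
    trace.step("Given", "a driver aged 20 with 1 claim in the last five years")
    trace.step("When", "the yearly premium is calculated from a base premium of 1000.00")
    premium = yearly_premium(1000.0, driver_age=20, claims=1)
    trace.step("Then", f"the yearly premium is {premium:.2f}")
    assert premium == 1650.0
    return premium


if __name__ == "__main__":
    young_driver_scenario(BusinessTrace())
```

Running the script prints the three steps, ending with "Then the yearly premium is 1650.00". Dedicated BDD tools usually work the other way round: they bind a business-readable specification (the input side) to the test code.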

In real projects, acceptance tests tend to have end-to-end scope. In most cases it is necessary to exercise many components to get a reliable impression of a certain functionality of the system.

However, where possible we should prefer smaller scopes for acceptance tests too, since smaller tests are faster, give more specific feedback, are more stable and are easier to understand. If a unit-scoped test gives us valuable feedback about acceptance, we should be happy.

As in many scenarios, trust is the key: if all your acceptance tests are green but the system still does not work, nobody will trust those tests. This is an argument for giving acceptance tests full end-to-end scope.

The whole classification debate is like the different revolutionary groups in Life of Brian: the Judean People's Front, the People's Front of Judea, the Popular Front of Judea... They all look the same, they all have the same purpose, sometimes they are even the same people, but the difference is still more important than everything else!

This is like the different revolutionary groups in Life of Brian: Judean People's Front, People's Front of Judea, Popular Front of Judea ... they all look the same, they all have the same purpose, sometimes they are even the same people ... but the difference is still more important than everything else!